I picked up a cheap charging cable for my Baofeng UV-9S (https://www.amazon.com/gp/product/B07TSDSQ4Z/). It .. well, it works.

But it messes up operating my radios! I heard super strong interference on my HF receiver and my VHF receivers.

So, let's take a look. I set up a little antenna in my shack. The Baofeng was about 6ft away.

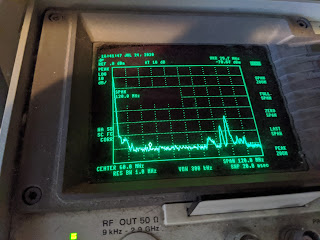

Here's DC to 120MHz. Those peaks to the right? Broadcast FM. The marker is at 28.5MHz.

Ok, let's plug in the Baofeng and charger.

Ok, look at that noise. Ugh. That's unfun.

What about VHF? Let's look at that. 100-300MHz.

Ok, that's expected too. I think that's digital TV or something in there. Ok, now, let's plug in the charger, without it charging..

Whaaaaaaaaattttttt oh wait. Yeah, this is likely an unshielded switching converter and it's unloaded. Ok, let's load it up.

Whaaaaaa oh ok. Well that explains everything.

Let's pull it open:

Yup. A boost converter going from 5V to 9V; no shielding, no shielded power cable and no ground plane on the PCB. This is just amazing. The 3ft charge cable is basically an antenna. "Unintentional radiator" indeed.

So - even with a ferrite on the cable, it isn't quiet.

It's quiet at 28MHz now so I can operate on the 10m band with it charging, but this doesn't help at all at VHF.

Ew.

Friday, July 24, 2020

Wednesday, July 15, 2020

Fixing up ath_rate_sample to actually work well with 11n

Way back in 2011 when I was working on FreeBSD's Atheros 802.11n support I needed to go and teach some rate control code about 802.11n MCS rates. (As a side note, the other FreeBSD wifi hackers and I at the time taught wlan_amrr - the AMRR rate control in net80211 - about basic MCS support too, and fixing that will be the subject of a later post.)

The initial hacks I did to ath_rate_sample made it kind of do MCS rates OK, but it certainly wasn't great. To understand why, and what I've done now, it's best to take a little trip down memory lane to the initial sample rate control algorithm by John Bicket. You can find a copy of the paper he wrote here - https://pdos.csail.mit.edu/papers/jbicket-ms.pdf .

Now, sample didn't try to optimise for maximum throughput. Instead, it attempted to optimise for minimum airtime to get the job done, and also attempted to minimise the time spent sampling rates that had a low probability of working. Note this was all done circa 2005 - at the time the other popular rate control methods tried to maintain the highest PHY rate that met some basic success rate (eg packet loss, bit error rate, etc, etc.) The initial implementation in FreeBSD also included multiple packet size bins - 250 and 1600 bytes - to allow rate selection based on packet length.

However, it made some assumptions about rates that don't quite hold in the 802.11n MCS world. Notably, it didn't take the PHY bitrate into account when comparing rates. It mostly assumed that going up in rate code - except between CCK and OFDM rates - meant it was faster. Now, this is true for 11b, 11g and 11a rates - again except when you transition between 11b and 11g rates - but this definitely doesn't hold true in the 802.11n MCS rate world. Yes, from MCS0 to MCS7 the PHY bitrate goes up, but then MCS8 is MCS0 times two streams (so it's slower than MCS7), and MCS16 is MCS0 times three streams.
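To make the "rate code isn't bitrate" point concrete, here's a small Python sketch that computes the 20MHz, long guard interval PHY rate for an MCS index. The function name and table layout are made up for illustration; only the per-symbol bit counts come from the 802.11n rate tables.

```python
# Data bits per OFDM symbol for ONE spatial stream at 20 MHz, indexed by
# MCS modulo 8: BPSK 1/2, QPSK 1/2, QPSK 3/4, 16-QAM 1/2, 16-QAM 3/4,
# 64-QAM 2/3, 64-QAM 3/4, 64-QAM 5/6.
DATA_BITS_PER_SYMBOL = [26, 52, 78, 104, 156, 208, 234, 260]
SYMBOL_TIME_US = 4.0  # long guard interval


def ht20_phy_rate_mbps(mcs):
    """PHY rate in Mbit/s for an equal-modulation HT MCS index (0..31)."""
    if not 0 <= mcs <= 31:
        raise ValueError("this sketch only handles MCS 0..31")
    streams = mcs // 8 + 1
    return streams * DATA_BITS_PER_SYMBOL[mcs % 8] / SYMBOL_TIME_US


for mcs in (0, 7, 8, 15, 16):
    print(f"MCS{mcs}: {ht20_phy_rate_mbps(mcs)} Mbit/s")
```

So a rate control algorithm that just walks up the index from MCS7 to MCS8 is stepping down in bitrate, not up.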

So my 2011/2012 work just did the minimum hacks to choose /some/ MCS rates. It didn't take the length of aggregates into account; it just used the length of the first packet in the aggregate. Very suboptimal, but it got MCS rates going.

Now fast-forward to 2020. This works fine if you're close to the other end, but it's very terrible if you're at the fringes of acceptable behaviour. My access points at home are not well located and thus I'm reproducing this behaviour very often - so I decided to fix it.

First up - packet length. I had to do some work to figure out how much data was in the transmit queue for a given node and TID. (Think "QoS category.") The amount of data in the queue wasn't good enough - chances are we couldn't transmit all of it because of 802.11 state (block-ack window, management traffic, sleep state, etc.) So I needed a quick way to query the amount of traffic in the queue taking into account 802.11 state. That .. ended up being a walk of each packet in the software queue for that node/TID list until we hit our limit, but for now that'll do.

So then I can call ath_rate_lookup() to get a rate control schedule knowing how long a packet may be. But depending upon the rate it returns, the amount of data that may be transmitted could be less - there's a 4ms limit on 802.11n aggregates, so at lower MCS rates you end up only sending much smaller frames (like 3KB at the slowest rate.) So I needed a way to return how many bytes to form an aggregate from, as well as the rate. That informed the A-MPDU formation routine how much data it could queue in the aggregate for the given rate.
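The arithmetic behind that 3KB figure is easy to sketch. This is illustrative Python, not the driver code; `max_aggregate_bytes` is a hypothetical name, and it ignores preamble time and A-MPDU delimiter/padding overhead, so the real limit is a little lower.

```python
MAX_AGGR_DURATION_US = 4000  # the 4 ms limit on 802.11n aggregates


def max_aggregate_bytes(phy_rate_mbps, queued_bytes):
    """Bytes worth aggregating: what is queued, capped by 4 ms at this rate."""
    # Mbit/s multiplied by microseconds gives bits.
    limit_bytes = int(phy_rate_mbps * MAX_AGGR_DURATION_US) // 8
    return min(queued_bytes, limit_bytes)


# MCS0 at 20 MHz (6.5 Mbit/s) with a full 64 KB queued:
print(max_aggregate_bytes(6.5, 65536))
# MCS7 at 20 MHz (65 Mbit/s) with 20000 bytes queued:
print(max_aggregate_bytes(65.0, 20000))
```

At 6.5 Mbit/s the cap works out to 3250 bytes, which lines up with the "like 3KB" number above.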

I also stored that away to use when completing the transmit, just to line things up OK.

Ok, so now I'm able to make rate control decisions based on how much data needs to be sent. ath_rate_sample still only worked with 250 and 1600 byte packets. So, I extended that out to 65536 bytes in mostly-powers-of-two values. This worked pretty well right out of the box, but the rate control process was still making pretty trash decisions.
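As a rough illustration of the size bins, here's a Python sketch that maps a packet or aggregate length to a bin. The bin boundaries below are an assumption that matches the "250, 1600, then mostly powers of two up to 65536" description; check the driver source for the real table.

```python
import bisect

# Assumed bin upper bounds, in bytes (hypothetical values for this sketch).
SIZE_BINS = [250, 1600, 4096, 8192, 16384, 32768, 65536]


def size_to_bin(nbytes):
    """Index of the smallest bin that can hold nbytes (clamped to the last bin)."""
    idx = bisect.bisect_left(SIZE_BINS, nbytes)
    return min(idx, len(SIZE_BINS) - 1)


for length in (100, 1500, 3250, 40000):
    print(length, "->", SIZE_BINS[size_to_bin(length)])
```

Each bin then keeps its own per-rate statistics, so a decision for a 32KB aggregate doesn't get polluted by how well 100 byte frames are doing.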

The next bit is all "statistics". The decisions that ath_rate_sample makes depend upon accurate estimations of how long packet transmissions took. I found that a lot of the logic was drastically over-compensating for failures by accounting a LOT more time for failures at each attempted rate, rather than only accounting the time spent failing at that rate. Here are two examples:

- If a rate failed, then all the other rates would get failure accounted for the whole length of the transmission to that point. I changed it to only account for failures for that rate - so if three out of four rates failed, each failed rate would only get its own time accounted to it, rather than everything.

- Short (RTS/CTS) and long (no-ACK) retries were being accounted incorrectly. If 10 short retries occurred, then the maximum failed transmission for that rate can't be 10 times the "it happened" long retry style packet accounting. It's a short retry; the only thing that could differ is the rate that RTS/CTS is being exchanged at. Penalising rates because of bursts of short failures was incorrect and I changed that accounting.

By and large, I pretty accurately nailed making sure that failed transmit rates account for THEIR failures, not the failures of other rates in the schedule. It was super important for MCS rates because mis-accounting failures across the 24-odd rates you can choose in 3-stream transmit can have pretty disastrous effects on throughput - channel conditions change super frequently and you don't want to penalise a rate for far, far too long and have it take a lot of subsequent successful samples just to try using that rate again.
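Here's a minimal sketch of the "each rate pays for its own failures" idea, in Python. It is not the ath_rate_sample code: the stats layout, `account_tx` and the per-attempt times are all made up for illustration, and it only models long retries.

```python
from collections import defaultdict


def new_stats():
    # Hypothetical per-rate counters for this sketch.
    return defaultdict(lambda: {"tries": 0, "airtime_us": 0, "ok": 0, "failed": 0})


def account_tx(stats, schedule, attempts_used, succeeded, attempt_time_us):
    """Charge each rate in a multi-rate retry schedule for its own attempts only.

    schedule: list of (rate, max_tries) in the order they were tried.
    attempts_used: total transmit attempts across the whole schedule.
    attempt_time_us: dict of rate -> airtime of one attempt at that rate.
    """
    remaining = attempts_used
    for rate, max_tries in schedule:
        if remaining <= 0:
            break  # later rates were never tried, so they are not touched
        tries = min(max_tries, remaining)
        remaining -= tries
        entry = stats[rate]
        entry["tries"] += tries
        entry["airtime_us"] += tries * attempt_time_us[rate]
        if succeeded and remaining == 0:
            entry["ok"] += 1  # the final attempt at this rate got through
        else:
            entry["failed"] += 1


stats = new_stats()
schedule = [("MCS12", 2), ("MCS11", 2), ("MCS3", 2), ("MCS0", 4)]
times = {"MCS12": 100, "MCS11": 150, "MCS3": 300, "MCS0": 1000}
account_tx(stats, schedule, attempts_used=7, succeeded=True, attempt_time_us=times)
for rate, entry in stats.items():
    print(rate, entry)
```

In this example three out of four rates fail, and MCS12 is only charged for its own two attempts rather than for the whole exchange up to the point MCS0 finally succeeded.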

So that was the statistics side done.

Next up - choices.

Choices were a bit less problematic to fix. My earlier hacks mostly just made it possible to choose MCS rates, but they didn't really take their behaviour into account. When you're doing 11a/11g OFDM rates, you know that you go in lock-step through 6, 9, 12, 18, 24, 36, 48 and 54Mbit/s, and if a rate starts failing the higher rates will likely also fail. However, MCS rates are different - the difference between MCS0 (1/2 BPSK, 1 stream) and MCS8 (1/2 BPSK, 2 streams) is only a couple dB of extra required signal strength. So given a rate, you want to sample at MCS rates around it but also ACROSS streams. So I mostly had to make sure that if I was at say MCS3, I'd also test MCS2 and MCS4, but I'd also test MCS10/11/12 (the 2-stream versions of MCS2/3/4) and maybe MCS18/19/20 for 3-stream. I also shouldn't really bother testing too high up the MCS chain if I'm at a lower MCS rate - there's no guarantee that MCS7 is going to work (5/6 QAM64 - fast but needs a pretty clean channel) if I'm doing ok at MCS2. So, I just went to make sure that the sampling logic wouldn't try all the MCS rates when operating at a given MCS rate. It works pretty well - sampling will try a couple MCS rates either side to see if the average transmit time for that rate is higher or lower, and then it'll bump it up or down to minimise said average transmit time.
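The "sample around the current rate, but across streams" idea can be sketched like this. It's illustrative Python with hypothetical names (`sample_candidates`, `spread`); the real sampling logic has more conditions than this.

```python
def sample_candidates(current_mcs, max_streams=3, spread=1):
    """MCS indexes worth sampling near current_mcs, across stream counts.

    spread is how many modulation steps either side to look at; it is an
    assumed tunable for this sketch.
    """
    base = current_mcs % 8  # modulation/coding index within one stream count
    lowest = max(0, base - spread)
    highest = min(7, base + spread)
    candidates = []
    for stream in range(max_streams):
        for idx in range(lowest, highest + 1):
            mcs = stream * 8 + idx
            if mcs != current_mcs:
                candidates.append(mcs)
    return candidates


print(sample_candidates(3))
```

For MCS3 that gives MCS2 and MCS4, then MCS10/11/12 and MCS18/19/20, and it never wanders off to MCS7 while sitting at MCS2.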

However, the one gotcha - packet loss and A-MPDU.

ath_rate_sample was based on single frames, not aggregates. So the concept of average transmit time assumed that the data either got there or it didn't. But, with 802.11n A-MPDU aggregation we can have the higher rates succeed at transmitting SOMETHING - meaning that the average transmit time and long retry failure counts look great - but most of the frames in the A-MPDU are dropped. That means low throughput and more actual airtime being used.

When I did this initial work in 2011/2012 I noted this, so I kept an EWMA of the packet loss both of single frames and aggregates. I wouldn't choose higher rates whose EWMA was outside of a couple percent of the current best rate. It didn't matter how good it looked at the long retry view - if only 5% of sub-frames were ACKed, I needed a quick way to dismiss that. The EWMA logic worked pretty well there and only needed a bit of tweaking.
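A minimal sketch of that EWMA filter, in Python. The smoothing weight and the margin are assumptions picked for illustration (the text above only says "a couple percent"), and the function names are hypothetical.

```python
def update_ewma(prev_pct, ok_frames, total_frames, weight=0.75):
    """Blend the latest per-aggregate success percentage into the running EWMA."""
    sample_pct = 100.0 * ok_frames / total_frames
    return weight * prev_pct + (1.0 - weight) * sample_pct


def worth_considering(candidate_ewma_pct, best_ewma_pct, margin_pct=2.0):
    """Dismiss rates whose sub-frame success EWMA trails the current best rate."""
    return candidate_ewma_pct >= best_ewma_pct - margin_pct


# A rate that "succeeds" but only gets 1 of 20 sub-frames ACKed:
ewma = update_ewma(100.0, ok_frames=1, total_frames=20)
print(ewma, worth_considering(ewma, best_ewma_pct=95.0))
```

The point is that a rate can look fine by retry counts while its sub-frame success rate collapses, and this check throws it out quickly.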

A few things stand out after testing:

- For shorter packets, it doesn't matter if it chooses the one, two or three stream rate; the bulk of the airtime is overhead and not data. That is, the difference between MCS4, MCS12 and MCS20 is any extra training symbols for 2/3 stream rates and a few dB extra signal strength required. So, typically it will alternate between them as they all behave roughly the same.

- For longer packets, the bulk of the airtime starts becoming data, so it begins to choose rates that are obviously providing lower airtime and higher packet success EWMA. MCS12 is the choice for up to 4096 byte aggregates; the higher rates start rapidly dropping off in EWMA. This could be due to a variety of things, but importantly it's optimising things pretty well.
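To put rough numbers on the short versus long packet observation, here's a Python sketch of frame airtime at 20MHz with long guard interval. It assumes the HT mixed-format preamble (legacy fields plus HT-SIG, HT-STF and one HT-LTF per stream, four for three streams) and ignores contention, ACKs and A-MPDU delimiters, so treat the output as ballpark only. The function name is made up.

```python
import math

# Data bits per OFDM symbol for one stream at 20 MHz, indexed by MCS modulo 8.
DATA_BITS_PER_SYMBOL = [26, 52, 78, 104, 156, 208, 234, 260]
HT_LTF_COUNT = {1: 1, 2: 2, 3: 4}  # training symbols per stream count


def ht20_airtime_us(mcs, nbytes):
    """Approximate on-air time of one frame, preamble included (MCS 0..23)."""
    streams = mcs // 8 + 1
    # L-STF + L-LTF + L-SIG + HT-SIG + HT-STF + HT-LTFs, in microseconds.
    preamble_us = 8 + 8 + 4 + 8 + 4 + 4 * HT_LTF_COUNT[streams]
    bits = 16 + 8 * nbytes + 6  # service field + payload + tail bits
    symbols = math.ceil(bits / (streams * DATA_BITS_PER_SYMBOL[mcs % 8]))
    return preamble_us + 4 * symbols


for nbytes in (100, 4096):
    for mcs in (4, 12, 20):
        print(f"{nbytes} bytes at MCS{mcs}: {ht20_airtime_us(mcs, nbytes)} us")
```

With 100 bytes the three rates land within a few microseconds of each other, while at 4096 bytes the extra streams clearly win on airtime, as long as the frames actually get through.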

I'm back into the grind of FreeBSD's wireless stack and 802.11ac

hi!

Yes, it's been a while since I posted here and yes, it's been a while since I was actively working on FreeBSD's wireless stack. Life's been .. well, life. I started the ath10k port in 2015. I wasn't expecting it to take 5 years, but here we are. My life has changed quite a lot since 2015 and a lot of the things I was doing in 2015 just stopped being fun for a while.

But the stars have aligned and it's fun again, so here I am.

Here's where things are right now.

First up - if_run. This is the Ralink (now MediaTek) 11abgn USB driver for stuff that they made before MediaTek acquired them. A contributor named Ashish Gupta showed up on the #freebsd-wifi IRC channel on EFnet to start working on 11n support for if_run, and he got it to the point where the basics worked - and I took it and ran with it enough to land 20MHz 11n support. It turns out I had a couple of suitable NICs to test with and, well, it just happened. I'm super happy Ashish came along to get 11n working on another NIC.

The if_run TODO list (which anyone is welcome to contribute to):

- Ashish is looking at 40MHz wide channel support right now;

- Short and long-GI support would be good to have;

- we need to get 11n TX aggregation working via the firmware interface - it looks like the Linux driver has all the bits we need and it doesn't need retransmission support in net80211. The firmware will do it all if we set up the descriptors correctly.

net80211 work

Next up - net80211. So, net80211 has basic 11ac bits, even if people think it's not there. It doesn't know about MU-MIMO streams yet but it'll be a basic 11ac AP and STA if the driver and regulatory domain support it.

However, as I implement more of the ath10k port, I find more and more missing bits that really need to be in net80211.

A-MPDU / A-MSDU de-encapsulation

The hardware does A-MPDU and A-MSDU de-encapsulation in hardware/firmware, pushing up individual decrypted and de-encapsulated frames to the driver. It supports native wifi and 802.3 (ethernet) encapsulation, and right now we only support native wifi. (Note - net80211 supports 802.3 as well; I'll try to get that going once the driver lands.)

I added support to handle decryption offload with the ath10k supplied A-MPDU/A-MSDU frames (where there's no PN/MIC at all, it's all done in firmware/hardware!) so we could get SOME traffic. However, receive throughput just plainly sucked when I last poked at this. I also added A-MSDU offload support where we wouldn't drop the A-MSDU frames with the same receive 802.11 sequence number. However...

It turns out that my Mac was doing A-MSDU in A-MPDU in 11ac, and the net80211 receive A-MPDU reordering was faithfully dropping all A-MSDU frames with the same receive 802.11 sequence number. So TCP would just see massive packet loss and drop the throughput in a huge way. Implementing this feature requires buffering all A-MSDU frames in an A-MPDU sub-frame in the reordering queue rather than tossing them, and then reordering them as if they were a single frame.

So I modified the receive reordering logic to reorder queues of mbufs instead of individual mbufs, and patched things to allow queuing multiple mbufs as long as they were appropriately stamped as being A-MSDUs in a single A-MPDU subframe .. and now the receive traffic rate is where it should be (> 300Mbit/s UDP/TCP.) Phew.
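Here's a toy model of that change in Python: each reorder slot holds a list of frames rather than a single frame. This is only a sketch, not the net80211 code; the class, the `amsdu`/`more` flags and the lack of any block-ack window handling are all simplifications I've assumed for illustration.

```python
class ReorderBuffer:
    """Toy receive reorder queue where each slot is a list of frames."""

    def __init__(self, start_seq=0):
        self.next_seq = start_seq
        self.slots = {}        # seq -> frames sharing that sequence number
        self.complete = set()  # sequence numbers with all their frames present

    def receive(self, seq, frame, amsdu=False, more=False):
        """Queue a frame; return whatever can now be released in order."""
        if seq in self.complete or (seq in self.slots and not amsdu):
            return []  # a real duplicate, so drop it
        self.slots.setdefault(seq, []).append(frame)
        if not more:
            self.complete.add(seq)  # last (or only) frame for this slot
        released = []
        while self.next_seq in self.complete:
            released.extend(self.slots.pop(self.next_seq))
            self.complete.discard(self.next_seq)
            self.next_seq = (self.next_seq + 1) % 4096
        return released


rb = ReorderBuffer()
print(rb.receive(1, "b1", amsdu=True, more=True))
print(rb.receive(1, "b2", amsdu=True, more=False))
print(rb.receive(0, "a"))
```

The two A-MSDU frames sharing sequence number 1 are both kept and handed up together once sequence number 0 arrives, instead of the second one being thrown away as a duplicate.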

U-APSD support

I didn't want to implement full U-APSD support in the Atheros 11abgn driver because it requires a lot of driver work to get it right, but the actual U-APSD negotiation support in net80211 is significantly easier. If the NIC supports U-APSD offload (like ath10k does) then I just have to populate the WME QoS fields appropriately and call into the driver to notify them about U-APSD changes.

Right now net80211 doesn't support the ADD-TS / DEL-TS methods for clients requesting explicit QoS requirements.

Migrating more options to per-VAP state

There's a bunch of net80211 state which was still global rather than per-VAP. It made sense in the old world - NICs that do things in the driver or net80211 side are driven in software, not in firmware, so things like "the current channel", "short/long preamble", etc are global state. However the later NICs that offload various things into firmware can now begin to do interesting things like background channel switching for scan, background channel switching between STA and P2P-AP / P2P-STA. So a lot of state should be kept per-VAP rather than globally so the "right" flags and IEs are set for a given VAP.

I've started migrating this state into per-VAP fields rather than global, but it showed a second shortcoming - because it was global, we weren't explicitly tracking these things per-channel. Ok, this needs a bit more explanation.

Say you're on a 2GHz channel and you need to determine whether you care about 11n, 11g or 11b clients. If you're only seeing and servicing 11n clients then you should be using the short slot time, short preamble and not require RTS/CTS protection to interoperate with pre-11n clients.

But then an 11g client shows up.

The 11g client doesn't need to interoperate with 11b, only 11n - so it doesn't need RTS/CTS. It can use short preamble and short slot time still. But the 11n client needs to interoperate, so it needs to switch protection mode into legacy - and it will do RTS/CTS protection.

But then, an 11b client shows up.

At this point the 11g protection kicks in; everyone does RTS/CTS protection, and long preamble and long slot time are used.

Now - is this a property of a VAP, or of a channel? Technically speaking, it's the property of a channel. If any VAP on that channel sees an 11b or 11g client, ALL VAPs need to update their protection mode.
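The 11n / 11g / 11b walk-through above boils down to a small decision table computed over every client seen on the channel, whichever VAP saw it. Here's a Python sketch; the function and field names are hypothetical and this is not how net80211 stores the state.

```python
def channel_protection(clients_by_vap):
    """Derive channel-wide settings from the client types seen by every VAP.

    clients_by_vap: dict of VAP name -> iterable of "11b" / "11g" / "11n".
    """
    seen = set()
    for modes in clients_by_vap.values():
        seen.update(modes)
    has_11b = "11b" in seen
    has_11g = "11g" in seen
    return {
        "ht_protection": has_11b or has_11g,  # 11n protects for pre-11n clients
        "erp_protection": has_11b,            # 11g protection: RTS/CTS for all
        "short_preamble": not has_11b,
        "short_slot": not has_11b,
    }


print(channel_protection({"vap0": ["11n"], "vap1": ["11n"]}))
print(channel_protection({"vap0": ["11n"], "vap1": ["11g"]}))
print(channel_protection({"vap0": ["11n", "11b"], "vap1": ["11g"]}))
```

Note that an 11b client on vap0 changes the answer for vap1 as well, which is the whole "property of a channel" point.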

I migrated all of this to be per-VAP, but I kept the global state for literally all the drivers that currently consume it. The ath10k driver now uses the per-VAP state for the above, greatly simplifying things (and finishing TODO items in the driver!)

ath10k changes

And yes, I've been hacking on ath10k too.

Locking issues

I've had a bunch of feedback and pull requests from Bjorn and Geramy pointing out lock ordering / deadlock issues in ath10k. I'm slowly working through them; the straight conversion from Linux to FreeBSD showed the differences in our locking and how/when driver threads run. I will rant about this another day.

Encryption key programming

Encryption keys are programmed using firmware calls, but net80211 currently expects key programming to be done synchronously. We can't sleep in the net80211 crypto key updates without changing net80211's locks to all be SX locks (and I honestly think that's a bad solution that papers over non-asynchronous code that honestly should just be made asynchronous.) Anyway, so it and the node updates are done using deferred calls - but this required me to take complete copies of the encryption key contents. It turns out net80211 can pretty quickly recycle the key contents - including the key that is hiding inside the ieee80211_node. This fixed up the key reprogramming and deletion - it was sometimes sending garbage to the firmware. Whoops.
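The "take a complete copy before deferring" fix is a general pattern, so here's a tiny Python model of it. Everything here (the class, the dict-shaped key, the callback) is made up for illustration and has nothing to do with the actual ath10k data structures.

```python
import copy
from collections import deque


class DeferredKeyUpdates:
    """Queue key updates for later, snapshotting the key at request time."""

    def __init__(self, send_to_firmware):
        self._queue = deque()
        self._send = send_to_firmware  # hypothetical firmware call

    def request_set_key(self, key):
        # Deep copy: the caller may recycle its buffer before we get to run.
        self._queue.append(copy.deepcopy(key))

    def run(self):
        while self._queue:
            self._send(self._queue.popleft())


sent = []
updates = DeferredKeyUpdates(sent.append)
key = {"index": 1, "material": bytearray(b"\x01\x02\x03\x04")}
updates.request_set_key(key)
key["material"][:] = b"\x00\x00\x00\x00"  # the stack recycles the key contents
updates.run()
print(sent[0]["material"])
```

Without the deep copy, the deferred call would see the zeroed buffer, which is the "sending garbage to the firmware" failure mode.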

What's next?

So what's next? Well, I want to land the ath10k driver! There are still a whole bunch of things to do in both net80211 and the driver before I can do this.

Add 802.11ac channel entries to regdomain.xml

Yes, I added it - but only for FCC. I didn't add them for all the other regulatory domain codes. It's a lot of work because of how this file is implemented and I'd love help here.

Add MU-MIMO group notification

I'd like to make sure that we can at least support associating to a MU-MIMO AP. I think ath10k does it in firmware but we need to support the IE notifications.

Block traffic from being transmitted during a node creation or key update

Right now net80211 will transmit frames right after adding a node or sending a key update - it assumes the driver is completing it before returning. For software driven NICs like the pre-11ac Atheros chips this holds true, but for everything USB and newer firmware based devices this definitely doesn't hold.

For ath10k in particular if you try transmitting a frame without a node in firmware the whole transmit path just hangs. Whoops. So I've fixed that so we can't queue a frame if the firmware doesn't know about the node but ...

... net80211 will send the association responses in hostap mode once the node is created. This means the first association response doesn't make it to the associating client. Since net80211 doesn't yet do this traffic buffering, I'll do it in ath10k - I'll buffer frames during a key update and during node addition/deletion to make sure that nothing is sent OR dropped.
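The buffering plan can be modelled as a simple gate in front of the transmit path. This Python sketch uses hypothetical names throughout (`NodeTxGate`, `node_ready`, `node_busy`) and is only meant to show the shape of the idea, not the planned ath10k implementation.

```python
from collections import defaultdict, deque


class NodeTxGate:
    """Hold frames for a node until the firmware knows about it."""

    def __init__(self, transmit):
        self._transmit = transmit            # hypothetical hardware TX call
        self._ready = set()                  # nodes the firmware has accepted
        self._pending = defaultdict(deque)   # node -> frames waiting to go out

    def send(self, node, frame):
        if node in self._ready:
            self._transmit(node, frame)
        else:
            self._pending[node].append(frame)  # buffered, not dropped

    def node_ready(self, node):
        """Call when node creation or a key update has completed."""
        self._ready.add(node)
        queue = self._pending.pop(node, deque())
        while queue:
            self._transmit(node, queue.popleft())

    def node_busy(self, node):
        """Call when a key update or node deletion starts."""
        self._ready.discard(node)


sent = []
gate = NodeTxGate(lambda node, frame: sent.append((node, frame)))
gate.send("sta1", "assoc-response")
print(sent)
gate.node_ready("sta1")
print(sent)
```

The association response waits in the queue until the node exists in firmware, then goes out in order.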

Clean up the Linux-y bits

There's a bunch of dead code which we don't need or don't use; as well as some compatibility bits that define Linux mac80211/nl80211 bits that should live in net80211. I'm going to turn these into net80211 methods and remove the Linux-y bits from ath10k. Bjorn's work to make linuxkpi wifi shims can then just translate the calls to the net80211 API bits I'll add, rather than having to roll full wifi methods inside linuxkpi.

To wrap up ..

.. job changes, relationship changes, having kids, getting a green card, buying a house and paying off old debts from your old hosting company can throw a spanner in the life machine. On the plus side, hacking on FreeBSD and wifi support are fun again and I'm actually able to sleep through the night once more, so ... here goes!

If you're interested in helping out, I've been updating the net80211/driver TODO list here: https://wiki.freebsd.org/WiFi/TodoStuff . I'd love some help, even on the small things!
